Last updated February 2026

This is an overview of AI tools, including Large Language Models (LLMs) such as ChatGPT, Claude, Gemini, and DeepSeek, and their relevance to teaching and assessment in higher education. It is necessarily in flux, as the capabilities of LLMs are changing rapidly and new controversies are emerging.

This page, like the rest of this site, is curated manually, without the use of any LLMs.

Events about AI in economics

Keep an eye on our events calendar; AI is a common topic of online seminars.

AI was a theme in many sessions at the DEE Conference 2025 and other events.

The Economics Network hosted a free online symposium on 22 January 2025. Chaired by Prof. Carlos Cortinhas, it brought together leading experts to explore the opportunities and challenges AI presents, focusing on responsibility, equity, and integrity. The full video from the session is now online.

The Economics Network previously hosted an online seminar on Assessment and AI on 29 February 2024, chaired by Prof. Dimitra Petropoulou. Prof. Alvin Birdi gave an overview of AI's capabilities and the controversy about its use, and Prof. Carlos Cortinhas reported on a survey on use of AI in higher education. Follow the link for lightly edited video as well as slides and links.

Midjourney's response to "A profile photo of an Economics professor". Midjourney is a generative AI program trained on hundreds of millions of images from the web. Large datasets can have biases or gaps which are reflected in stereotypical features in its output.

RES / CTaLE / Stone Centre report

The report "Rethinking Economics Assessments for a GenAI World" was published in October and is freely downloadable. A collaboration between the Royal Economic Society, CTaLE, and the Stone Centre at UCL, it reports multiple lines of evidence on the impact of GenAI on higher education, its use by students and by employers, and how economics academics are adapting their assessments.

Course on AI in education

FutureLearn ran an online course, "AI in Education", from 6 May 2025 onwards. Staff at some universities will have cost-free access to the materials.

Background

- Although one family of LLM-driven chatbots, ChatGPT, initially attracted most attention, there are multiple LLMs with similar capabilities. LLMs can drive other software and devices and are rapidly being integrated into tools like search engines, social media, office applications (ref 8), or text messaging apps. Hence one can be "using" an LLM in increasingly many situations.

- Although the initial output of an LLM has a recognisable style, the default behaviour can be altered in several ways. One can give the model conversational feedback, tell it to adopt a persona, or feed its output into other AI tools.

- LLMs have prompted a lot of discussion about whether and how Higher Education needs to adapt. In the context of assessment, there are concerns about sophisticated cheating and the generation of pseudo-information (termed "hallucination"). There is also discussion about whether LLMs will assist learning (for students in general or for some kinds of student), whether they can assist educators, and whether universities need to prepare students for workplaces that in many cases will use LLMs.

Capabilities

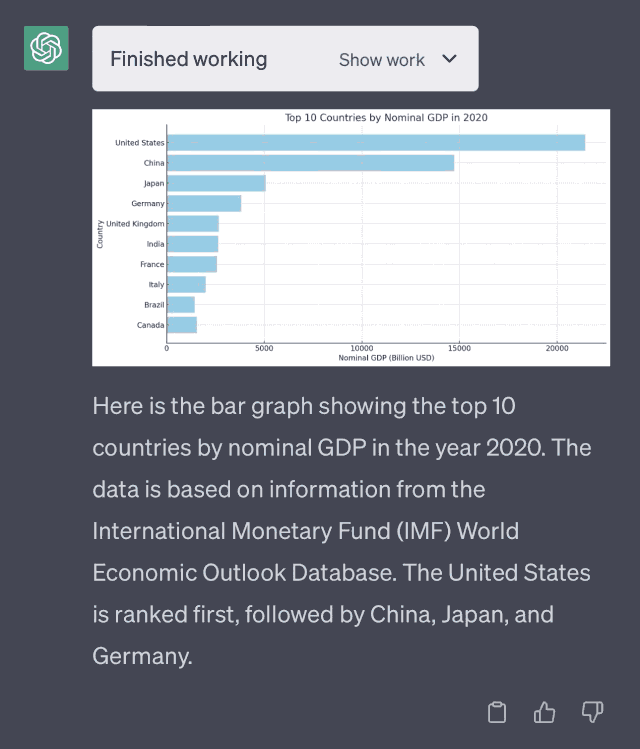

GPT-4's code interpreter retrieving and plotting GDP data from a public interface

Not all LLMs have the same capabilities. Even the functionality of ChatGPT differs between the paid and free versions and depends on what plugins are available to each user.

There are many companies providing chatbots, each of which comes in multiple versions. Each chatbot can create and manipulate text, images, and code, but there are differences between them. (ref 18)

The brand name "ChatGPT", "Gemini", "Claude" or "DeepSeek" is not on its own a good indicator of the capabilities of the chatbot. Each of these exists in multiple versions with widely varying capabilities. Most of these bots run online; some can be downloaded to a personal computer or phone and run from there.

In at least some cases, LLMs can:

- Generate writing in a given style, including writing at a specific educational level or introducing deliberate errors.

- Convert between writing styles, e.g. from bullet-point list to narrative essay or vice versa; casual to academic style or vice versa. (ref 9)

- Summarise or analyse existing documents, for example to extract common themes or to suggest improvements. Chatbots can only process a limited amount of text at a time (their context window), and this is often a limiting factor, but they are approaching the point where they can take entire books as input.

- Summarise or analyse videos, describing what happens in them or pointing to significant moments. Even more than with text documents, the context window can be a limiting factor.

- Generate code, including comments, from a verbal description, in languages including R, MATLAB, Python, and Excel macros. (ref 1) ChatGPT has had difficulty writing Stata code, although it is much more capable with other languages. (ref 2)

- Generate entire slide shows from short text prompts. For PowerPoint, Google Slides, Prezi, and Canva there are AI-driven plugins that add this function. Results vary in quality, and at the very least some human cleanup is necessary. Even general-purpose chatbots that cannot create PowerPoint files, such as ChatGPT, can generate lists of bullet points as a basis for presentation slides.

- Look up information on the internet by connecting to external services such as search engines or Wolfram|Alpha. (ref 3)

- Analyse an Excel data set, visualise the data, suggest hypotheses that can be tested with the data, conduct regressions and report the results in natural language. (ref 7) Create static charts or animated or interactive visualisations to summarise a data set.

- Score more highly than most human students on some exams. GPT-4 performs very differently on different kinds of exam involving mathematics. It gets a 5 (the highest score) on Advanced Placement exams in Microeconomics, Macroeconomics, and Statistics. On the SAT Math test used in the US, it scores above 89% of students. (ref 4) On American Mathematics Competition exams, it scores around the median on the AMC12 and in the bottom 12% on the AMC10. (ref 5)

- A report published by Meta (the creators of the Llama family of LLMs) on 23 July 2024 compares seven LLMs (three versions of Llama 3 plus four competitors) on a variety of exams (p. 36). It finds a slight advantage for Llama models in macroeconomics and a slight advantage for GPT-4o and Claude Sonnet in microeconomics. These latest models perform near the ceiling, whereas GPT-3.5 performs far lower.

- Help students with mathematical questions from the SAT exam (e.g. solving for two unknowns with two constraints; identifying the missing measurement from an average) by generating custom explanations.

- Watch a screen-capture video of someone working at a computer, describe what they did, and advise on more efficient ways of working. (ref 20)

- The more recent generation of LLMs, starting in September 2024, "think aloud" about the problems they are presented with. This presents difficulties if, to distinguish human work from AI work, you ask for a description of the thought process that led to the answer. (ref 22) Tyler Cowen asks OpenAI o1 a complex question about economics in this short promotional video from OpenAI.

- NotebookLM is an interface to Google Gemini, tailored for note-taking. Given an audio recording of a lecture, a long text, or a set of slides from Google Slides, it can digest them down into notes or expand existing notes with more detail. In addition to text, the output can be a podcast-like audio with AI-generated voices. The bot can hold text conversations about the contents of documents, and can generate questions and answers, or study guides, relating to the documents' content.
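To make the idea of a context window concrete, here is a minimal sketch of how a long document might be split into pieces that each fit within a fixed limit, so they can be passed to a chatbot one at a time. It assumes, for simplicity, that the limit is measured in words; real models count tokens, which do not map one-to-one onto words. `chunk_words` is a hypothetical helper, not part of any chatbot's interface.

```python
def chunk_words(text, max_words, overlap=0):
    """Split text into consecutive chunks of at most max_words words.

    Simplifying assumption: the context limit is measured in words.
    Real LLMs count tokens, which do not map one-to-one onto words.
    `overlap` repeats the last few words of one chunk at the start of
    the next, so text cut at a boundary keeps some surrounding context.
    """
    if max_words <= 0 or not (0 <= overlap < max_words):
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# Hypothetical usage: a short "document" and a 4-word limit
document = "supply and demand determine prices in a competitive market"
for piece in chunk_words(document, max_words=4, overlap=1):
    print(piece)
```

Anything outside the current chunk is invisible to the model while it processes that chunk, which is why summaries of very long documents or videos can miss connections between distant parts.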

Positive uses of AI in education

- The paper "ChatGPT as economics tutor: Capabilities and limitations" by Bröse, Spielmann, and Tode (2026) examines the abilities of three versions of ChatGPT to act as individual tutors in economics. They report that "while the models can generate examples, they often lack the depth and relevance necessary to effectively aid student understanding in economics," and identified a "lack of a holistic perspective [which] means that students may miss critical aspects, making it harder to apply concepts to the real-world," but conclude that "ChatGPT can serve as an effective automated tutor for basic, knowledge-based questions, supporting students while posing a manageable risk of misinformation."

- The pre-print "Protecting Human Cognition in the Age of AI" by Singh et al. (2025) observes that deep learning involves a phase of perplexity, doubt, and "mental unrest" as students' existing ideas are challenged and they encounter uncertainty. Chatbots can "save" students from this experience by providing reassuring answers immediately. Thus they can be very damaging in the early stage of the learning process, but engagement with AI later in the course can help to build reflection and critical/ evaluative skills.

- Some students are using chatbots to fix mismatches between how they are taught and how they want to learn. Prompts include "Create an image to help me visualise [concept]," "I want to learn by teaching. Ask me questions about [topic] so I can practice explaining the core concepts to you," and "Decode this dense passage into language I can understand."

- Two webinars in the Princeton University Bendheim Center for Finance series address how AI tools can help (or harm) economics education. They can both be viewed on YouTube. Justin Wolfers of the University of Michigan (October 2024) discussed AI's use in personalising the experience of learners and teachers. Kevin Bryan of the University of Toronto (December 2024) warned of the harm of using "raw" models and argued that benefits of personalised education come from AI tools specific to the task.

- Jana Sadeh and Christian Kellner have experimented with a custom chatbot to help students choose optional economics modules. In their case study, they describe the AI capabilities as not quite production-ready and have released the service alongside disclaimers and additional information sources.

- In "Generative AI in Economics: Teaching Economics and AI Literacy", Sedefka Beck and Donka Brodersen describe getting their students to critique the output of ChatGPT.

- "This approach allows students to practice responsible use of GenAI, observe its shortcomings, and develop critical thinking skills while learning how to evaluate and refine AI-generated solutions."

Some suggestions of "What ChatGPT is good at" from Alvin Birdi's February 2024 presentation:

- Feedback on paper drafts

- Providing counterarguments

- Improving writing

- Synthesising text from bullet points

- Editing text

- Evaluating text (lack of clarity, passive voice, structure etc) → feedback

- Acting as a tutor for concepts

- Brainstorming ideas and examples related to a theme → lead to homogeneity?

- Drafting assessments that do or do not make use of ChatGPT/AI

- Drafting assessments that maintain integrity

- Initial drafts for teaching plans/lectures

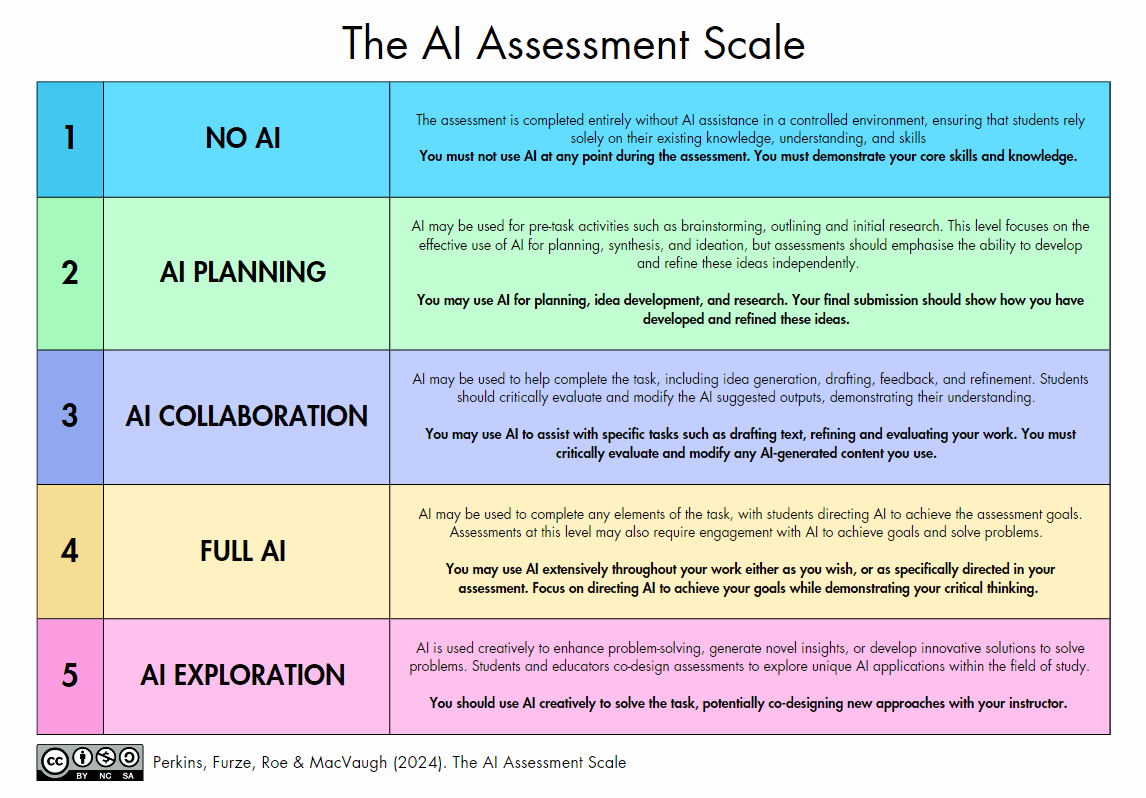

- Some educators are using "traffic light" systems to indicate whether, in the context of a given assessment, AI is banned, permitted in a limited form, or positively encouraged. An example is the AI Assessment Scale:

- AI in education (actionable insights for educators), dated 16 July 2023, is one of a suite of reports produced by Warwick University.

- UCL's guidance for tutors and students on AI distinguishes tasks where AI is forbidden from those where it is allowed as an assistive tool and those where it can be integral to assessment tasks.

- Alice C. Evans of KCL encourages student use of an AI chatbot in a course on development economics ("How Can Professors Prevent Plagiarism in a World of ChatGPT?", published May 2024)

- "'What’s the primary reason why East Asia got rich?', 'Why is violence so high in Latin America?' Write an essay answering one of these questions, then ask Claude to highlight the limitations. Improve the essay, provide better evidence, and submit along with your conversation with Claude."

- The WEF report "Shaping the Future of Learning: The Role of AI in Education 4.0", published April 2024, identifies four areas in which AI could have a positive effect on education:

- 1) Personalized learning content and experiences; 2) Refined assessment and decision-making processes; 3) Augmentation and automation of educator tasks; 4) Integration of AI into educational curricula (teaching with and about AI)

- Creative Storytelling in Economics with Lego and AI by Swati Virmani, published February 2024 in the Economics Network Ideas Bank

- "This drove me to experiment with the concept of composing a complete storyboard, directing an AI tool to define a term, write the scenes/sequences, and subsequently transform each scene into a descriptive image."

- Generative AI and accessibility in education by Helen Nicholson for Jisc Involve, October 2023

- "[G]enerative AI [can] offer a range of tools to help neurodivergent users with tasks they may struggle with, including estimating the tone of text, changing the tone of their own writing and estimating how long a given task might take to complete."

- "[T]he availability of this technology should never be used as an excuse to not provide students with the necessary accommodations for their needs. For instance, the burden shouldn’t be on students to change the format of their course content so that it is accessible, it remains on institutions to provide accessible documents."

- Language models and AI in economic education: Unpacking the risks and opportunities, presentation slides from DEE Conference 2023 by Tomasz Kopczewski and Ewa Weychert

- "Change narratives about AI: Passive use of generative AI is the first step to unemployment. You must be a 'critical miner' of generative AI. Don't stop at acquiring the ore (information) - turn it into knowledge and share it with others. Your even imperfect interpretation of information is needed to enhance diversity and, thus, collective knowledge."

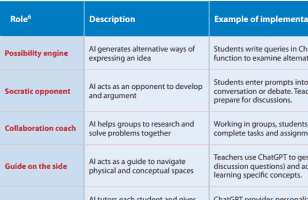

- Assigning AI: Seven Approaches for Students, with Prompts by Ethan R. Mollick and Lilach Mollick, updated 23 September 2023

- "The authors propose seven approaches for utilizing AI in classrooms: AI-tutor, AI-coach, AI-mentor, AI-teammate, AI-tool, AI-simulator, and AI-student, each with distinct pedagogical benefits and risks. The aim is to help students learn with and about AI, with practical strategies designed to mitigate risks such as complacency about the AI’s output, errors, and biases."

- What Should Data Science Education Do with Large Language Models? by Xinming Tu et al., 7 July 2023 (preprint)

- "With the assistance of LLMs, data scientists can shift their focus towards higher-level tasks, such as designing questions and managing projects, effectively transitioning into roles similar to product managers."

- "LLMs can assist educators in designing dynamic and engaging curricula, generating contextually relevant examples, exercises, and explanations that help students grasp complex concepts with greater ease."

Some suggested uses of ChatGPT in education, from the UNESCO guide ChatGPT and Artificial Intelligence in higher education: Quick start guide by the UNESCO International Institute for Higher Education in Latin America and the Caribbean, April 2023:

- "Due to its ability to generate and assess information, ChatGPT can play a range of roles in teaching and learning processes. Together with other forms of AI, ChatGPT could improve the process and experience of learning for students."

- See also the UNESCO document "Guidance for generative AI in education and research", issued 7 September 2023

- Using AI to Implement Effective Teaching Strategies in Classrooms: Five Strategies, Including Prompts by Ethan R. Mollick and Lilach Mollick, 17 March 2023

- "Many teaching techniques have proven value but are hard to put into practice because they are time-consuming for overworked instructors to apply. With the help of AI, however, these techniques are more accessible."

- "[I]ntentionally implementing teaching strategies with the help of an LLM can be a force multiplier for instructors and provide students with extremely useful material that is hard to generate."

- ChatGPT is the push higher education needs to rethink assessment by Sioux McKenna et al., The Conversation, 12 March 2023

- "ChatGPT can be used to support essay writing and to help foster a sense of mastery and autonomy. Students can analyse ChatGPT responses to note how the software has drawn from multiple sources and to identify flaws in the ChatGPT responses which would need their attention."

- ChatGPT as a teaching tool, not a cheating tool by Jennifer Rose, Times Higher Education, 21 February 2023

- "One way that ChatGPT answers can be used in class is by asking students to compare what they have written with a ChatGPT answer. [...] This dialogic approach develops the higher-order thinking skills that will keep our students ahead of AI technology."

- Some initial lessons from using ChatGPT and what I will tell my Macroeconomics students by Stefania Paredes Fuentes, University of Warwick, January 2023

- "Artificial Intelligence tools are not going to disappear, and they are going to change the way we learn (and hopefully teach)."

- "Rather than 'banning' the use of ChatGPT [...], let’s engage with a conversation with students regarding the limitations of this technology but also on ways to use it."

Student use of AI

- HEPI/Kortext AI survey shows explosive increase in the use of generative AI tools by students. Report by Higher Education Policy Institute, February 2025

- "The proportion of students using generative AI tools such as ChatGPT for assessments has jumped from 53% last year to 88% this year. The most common uses are for generative AI to explain concepts, summarise articles and suggest research ideas. … Just under half of students (45%) said they had used AI at school."

- The Future of Learning: Student’s Opinions on AI. Report by a student at the University of Bristol, reporting survey and focus group findings of students' opinions on the use of AI in education, published at the start of 2025

- Padlet from the Economics Network Virtual Symposium session on Assessment and AI, 29 February 2024

- ChatGPT for students with Dyslexia? DyStIncT magazine, 19 February 2023

- "ChatGPT also has amazing potential for supporting people with learning difficulties. [...] However, the difficulty and potentially huge drawback to ChatGPT is this use. The function that makes it helpful is the function that can be incredibly problematic."

Prompting and custom bots

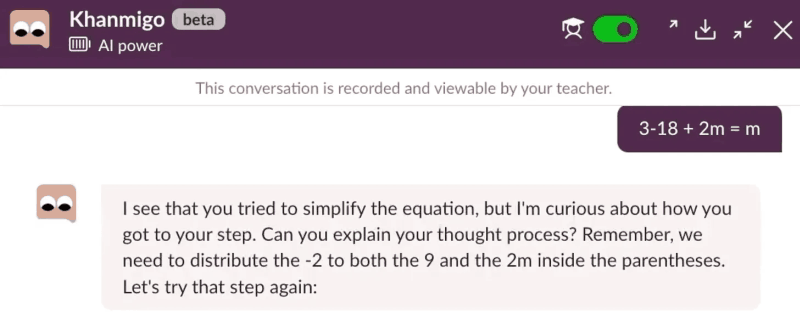

Khanmigo bot giving feedback to a learner about a mistaken step in an algebra problem. Via the Mathworlds Substack

- Chatbots can be given customised instructions and "personas" to prepare them for a specific task. These custom bots do not have additional functionality, but are tailored for a particular task such as talking a learner through a mathematical exercise. They are "re-skinned" versions of existing chatbots rather than a distinct technology, so they have the same pattern of strengths and errors. Khanmigo is Khan Academy's customised ChatGPT for giving feedback to learners. Other custom bots help write code, summarise research papers, or prepare slide presentations. New custom bots appear each week.

- Members of the Network have found students creating customised bots to help with specific courses or aspects of learning.

- Telling a chatbot "Let's think step by step" or "solve this problem step-by-step" seems to improve answers in some cases. The relationship between the way a bot is instructed ("prompted") and its performance is very unpredictable. Some academic users have found that telling the bot it will earn tips for good performance actually improves results. Others report benefits from telling the bot that it has taken an Adderall tablet! Small variations in the formatting of the input (spacing, capitalisation, whether multiple-choice option numbers are bracketed) can have large impacts on performance. (ref 23)

- Some of the predictability of chatbots (in their text style and in the ideas they suggest) can be mitigated by custom instructions. (ref 19) Some LLMs have a "temperature" setting, which controls the amount of randomness in their output and can be set high to get less predictable responses.

- Ethan Mollick's Prompt Library is a set of example prompts for Higher Education contexts, identifying the bots with which they have been used. These illustrate how much tailoring can go into a prompt to adapt LLMs for a specific purpose, and they can be starting points for subject customisation.
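The effect of a temperature setting can be illustrated with a minimal sketch. It assumes the common approach of dividing the model's raw scores ("logits") for candidate next words by the temperature before turning them into probabilities with a softmax; the candidate words and scores below are invented, and individual chatbots may implement the setting differently.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max to avoid overflow in exp()
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, logits, temperature, rng):
    """Pick one candidate word at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidate next words and invented scores
words = ["growth", "inflation", "unemployment"]
logits = [2.0, 1.0, 0.1]

rng = random.Random(42)  # fixed seed so the run is repeatable
for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs],
          sample_next_word(words, logits, temperature, rng))
```

A low temperature concentrates almost all the probability on the highest-scoring word, so output becomes more predictable; a high temperature flattens the distribution, so less likely words are chosen more often.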

Performance in economics tests and exams

- ChatGPT has Aced the Test of Understanding in College Economics: Now What? by Wayne Geerling, G. Dirk Mateer, Jadrian Wooten, and Nikhil Damodoran, The American Economist, April 2023 (testing ChatGPT 3)

- "While ChatGPT-generated papers have received good grades, they lack the depth of understanding that is expected in higher education."

- "Tools like ChatGPT are likely to become a common part of the writing process, just as calculators and computers have become essential tools for learning mathematics and science. The challenge of universities is to adapt their curriculum to this new reality."

- How to Learn and Teach Economics with Large Language Models, Including GPT by Tyler Cowen and Alexander T. Tabarrok, GMU Working Paper in Economics No. 23-18, 27 March 2023

- "GPTs have not yet fully mastered long chains of abstract reasoning; they cannot "think through" a complex economic problem from beginning to end and provide a comprehensive answer with multiple cause-and-effect relationships."

- "Chat GPT is very good at writing exam questions throughout the curriculum. [...] ChatGPT and Bing Chat will also create very credible syllabi for a variety of courses including readings, course policies, and grading procedures."

- Would Chat GPT3 Get a Wharton MBA? A Prediction Based on Its Performance in the Operations Management Course by Christian Terwiesch, University of Pennsylvania, January 2023

- "Chat GPT3 does an amazing job at basic operations management and process analysis questions including those that are based on case studies. Not only are the answers correct, but the explanations are excellent. [...] Chat GPT3 at times makes surprising mistakes in relatively simple calculations at the level of 6th grade Math. These mistakes can be massive in magnitude."

- GPT-4 in Education: Evaluating Aptness, Reliability, and Loss of Coherence in Solving Calculus Problems and Grading Submissions by Alberto Gandolfi, International Journal of Artificial Intelligence in Education, May 2024

- "[W]hile the current ChatGPT exhibits comprehension of [the task of grading calculus homework] and often provides relevant outputs, the consistency of grading is marred by occasional loss of coherence and hallucinations. Intriguingly, GPT-4's overall scores, delivered in mere moments, align closely with human graders, although its detailed accuracy remains suboptimal."

Plagiarism detection and AI

Section 4 of the Handbook for Economics Lecturers chapter on Prevention and Detection of Plagiarism in Higher Education addresses the implications of AI for plagiarism and different ways in which universities can respond.

Some LLM "detectors" are available, but suffer from false positives, variations in LLM output and the availability of tools that re-write text. (ref 6) University teachers are confident in their ability to tell whether an essay is AI-generated, but do not seem to demonstrate such an ability. (ref 21)
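How much false positives matter depends on how common AI-written work actually is. As a worked illustration, Bayes' rule gives the share of flagged essays that really are AI-generated. The figures below are hypothetical assumptions chosen for illustration, not measurements of any real detector, and `share_flagged_that_are_ai` is a hypothetical helper.

```python
def share_flagged_that_are_ai(prevalence, sensitivity, false_positive_rate):
    """Bayes' rule: P(essay is AI-written | detector flags it)."""
    true_flags = prevalence * sensitivity                 # AI essays correctly flagged
    false_flags = (1 - prevalence) * false_positive_rate  # human essays wrongly flagged
    return true_flags / (true_flags + false_flags)


# Hypothetical figures, not measurements of any real detector:
# 10% of essays are AI-written, the detector catches 90% of them,
# and it wrongly flags 5% of human-written essays.
p = share_flagged_that_are_ai(prevalence=0.10, sensitivity=0.90, false_positive_rate=0.05)
print(f"{p:.0%} of flagged essays are actually AI-written")
```

Under these assumed figures, roughly one flagged essay in three would have been written by a human; the lower the true prevalence of AI use, the larger that share becomes.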

- ChatGPT, assessment and cheating – have we tried trusting students? WONKHE, 20 February 2023

- "We’ve seen some efforts to employ the “detection tool” approach used for other forms of academic malpractices – but every single one of them has been beaten in practice, and many flag the work of humans as AI derived."

- "One of fundamental shifts in assessment is therefore likely to be around defining the level of creativity and originality lecturers expect from students, and what these terms will mean."

- The Rise of Artificial Intelligence Software and Potential Risks for Academic Integrity: Briefing Paper for Higher Education Providers QAA, 30 January 2023

- "Assessments generated by the software tools used by LLMs may take the form of coursework such as essays and dissertations, but also projects, presentations, computer source code and other forms"

- "[W]ork created in this way can be difficult to identify and cannot be picked up by more traditional plagiarism detection tools."

Ethical concerns

Users of LLMs should be aware that:

- Training of the ChatGPT model involved African workers in conditions in which they have been described as "underpaid and exploited". (ref 13)

- LLMs require a lot of computation, which in turn requires power and cooling. The data centres running these models have a large water footprint. (ref 10) However, their environmental impact is much smaller than that of many everyday activities in the modern world, such as watching streaming video, shopping online, or browsing social media. (ref 24)

- There are also concerns about the carbon impact of the data centres. (ref 11) Attempts to compare the carbon impact of AI-generated content against other ways of creating similar content run into problems of data quality and relevance. (ref 16)

- LLMs are trained on huge sets of text created by human beings. A training set may include the entirety of Wikipedia, StackOverflow, or GitHub. Image generators are trained on art and photography created by human beings. Since these creators are not credited, there are ethical questions around exploitation, copyright, and consent. ChatGPT can potentially infringe copyright by reproducing an existing piece of text or a recognisable image, and it cannot itself tell when it is doing so. (ref 12)

- Since the training data are drawn from the digital world, they reflect the dominance of English and the comparative absence of many indigenous languages. Values and assumptions of the people who contribute most online text shape the default output of the models. LLMs thus perpetuate languages, attitudes, and values of one group of humanity at the expense of the rest. (ref 14)

There is some more info in Hidden Workers powering AI, a March 2023 post on the JiscInvolve blog.

Similar concerns are also raised about other online services — not to mention other features of the modern workplace — but ethical concerns are part of the current debate about the use of LLMs and may even form part of the classroom discussion about whether and how they should be used.

References

[1] Artificial Intelligence and Signal Processing, Tom O'Haver, University of Maryland at College Park, March 2023

[2] Can AI write your Stata code? Owen Ozier, the World Bank, 1 February 2023

[3] ChatGPT Gets its Wolfram Super-powers, Stephen Wolfram, 23 March 2023

[4] List: Here Are the Exams ChatGPT Has Passed so Far, Lakshmi Varanasi, Business Insider, 21 March 2023

[5] GPT-4 is Amazing but Still Struggles at High School Math Competitions, Russell Lim, 24 March 2023

[6] How to detect ChatGPT plagiarism — and why it’s becoming so difficult, Aaron Leong, Digital Trends, 20 January 2023

[7] It is starting to get strange, Ethan Mollick, 2 May 2023 / What AI can do with a toolbox... Getting started with Code Interpreter, Ethan Mollick, 7 July 2023

[8] Introducing Microsoft 365 Copilot, Microsoft 365, 16 March 2023

[9] 10 Strategies to Alter ChatGPT's Writing Style, Luke Skyward, PlainEnglish, 16 January 2023

[10] ChatGPT needs to 'drink' a water bottle's worth of fresh water for every 20 to 50 questions you ask, researchers say, Will Gendron, Business Insider, 14 April 2023

[11] Artificial Intelligence Is Booming—So Is Its Carbon Footprint, Josh Saul and Dina Bass, Bloomberg UK, 9 March 2023. See also ChatGPT’s Carbon Footprint, Tanushree Kain, SigmaEarth, 12 April 2023

[12] Copyright and ChatGPT, Kirsty Stewart and Hannah Smethurst, Thorntons Law, 1 March 2023. "ChatGPT could subsequently produce material in response to any question by a user, which directly infringes an existing copyright holder’s work. Unfortunately, there is no easy way for users to tell what, if any, of ChatGPT’s responses have been pulled directly from an existing (and protected by copyright) work, nor who the author of this original work is."

[13] Exclusive: OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic, Billy Perrigo, TIME, 18 January 2023

[14] Don’t fret about students using ChatGPT to cheat – AI is a bigger threat to educational equality, Collin Bjork, The Conversation, 5 April 2023; Artificial generative intelligence risks a return to cultural colonialism, Songyee Yoon, VentureBeat, 25 April 2023

[16] Bill Tomlinson, Rebecca W. Black, Donald J. Patterson, Andrew W. Torrance, "The Carbon Emissions of Writing and Illustrating Are Lower for AI than for Humans" [preprint] (8 March 2023) arXiv

[17] Kumar, Harsh and Rothschild, David M. and Goldstein, Daniel G. and Hofman, Jake, "Math Education with Large Language Models: Peril or Promise?" (November 22, 2023). https://doi.org/10.2139/ssrn.4641653

[18] "Google's Gemini Advanced: Tasting Notes and Implications", Ethan Mollick, 8 February 2024

[19] Meincke, Lennart; Mollick, Ethan R. and Terwiesch, Christian, "Prompting Diverse Ideas: Increasing AI Idea Variance" (January 27, 2024). https://doi.org/10.2139/ssrn.4708466

[20] "Which AI should I use? Superpowers and the State of Play", Ethan Mollick, 18 March 2024

[21] Fleckenstein, J. et al. (June 2024) "Do teachers spot AI? Evaluating the detectability of AI-generated texts among student essays" Computers and Education: Artificial Intelligence https://doi.org/10.1016/j.caeai.2024.100209

[22] "Something New: On OpenAI's 'Strawberry' and Reasoning", Ethan Mollick, 12 September 2024

[23] Sclar, M.; Choi, Y.; Tsvetkov, Y.; Suhr, A. (July 2024) "Quantifying Language Models' Sensitivity to Spurious Features in Prompt Design or: How I learned to start worrying about prompt formatting" Preprint. https://doi.org/10.48550/arXiv.2310.11324

[24] "Using ChatGPT is not bad for the environment", Andy Masley, 13 January 2025