Steve Cook and Duncan Watson, Swansea University

Edited by Edmund Cannon, University of Bristol

Published February 2013

1. Introduction

The analysis of assessment methods is an effervescent aspect of pedagogical inquiry into higher education teaching practices. At the heart of any such analysis must be a clear recognition of the objectives or purpose of assessment. The most obvious function of assessment is as a means of gauging student progress or learning. However, despite the undeniable truth of this statement, a crucial role of assessment is to serve as a means of supporting, encouraging and improving the learning process, rather than simply acting as a method of measuring its extent. Clearly an immediate issue that arises here is the familiar distinction between summative and formative methods of assessment. However, in practice, the border between the two is blurred as all assessment, irrespective of whether it counts towards a final module mark, must clearly seek to develop understanding and assist the learning process. Consequently, consideration of ‘learning’ provides a starting point in the analysis of assessment.

For those not familiar with the associated ‘learning’ literature, a recommended starting point is Weinstein and Mayer (1986) and their identification of four categories of cognitive learning strategies: rehearsal, elaboration, organisation and comprehension monitoring. Briefly, rehearsal refers to the repetition of information; elaboration and organisation emphasise the integration of new understanding with what preceded it; and comprehension monitoring evaluates the knowledge that has been acquired. This variety offers significant scope to consider the extent to which these strategies can be embedded within a single methodology, which could provide the instructor with a straightforward system for appraising any individual assessment choice. However, whilst it is important to note such possibilities, it is not the primary purpose of this chapter to explore them. Instead, such studies and the insights they provide will be recognised as offering a potential structure for assessment design and will be referred to as necessary later in this chapter, but will not be considered in detail. Rather, the aim here is to provide an overview of basic ‘summative’ assessment issues, and then to apply this to several case studies in order to provoke a debate that helps economics instructors further develop the methods they employ.

To fulfil this aim, the chapter will proceed as follows. The next section examines some general points relating to assessment. This involves discussion of the role of technology and the uncertainty associated with its future impact, along with the bigger picture concerning the role of assessment and the limitations and strengths of specific assessment methods. The section after that considers more ‘structural’ issues, reflecting upon both practicalities and the pedagogical literature. The analysis then presents specific case studies which not only highlight a range of issues raised previously, but also provide examples of practice and a statistical analysis of the impact of differing assessment schemes.

2. Main content

2.1 Focus

The floating bicycle, paper underpants and the book on interplanetary etiquette? These are just a few of the predictions made by the BBC’s Tomorrow’s World that went a little awry. To be fair to the programme’s makers, technological predictions are notoriously difficult to make accurately, and the same uncertainty over what will prove genuinely useful governs the consequences of technology for assessment opportunities. However, despite this uncertain foundation, it remains necessary to address such predictions of technological advancement before this chapter can turn to the potential of the various ‘new’ assessment methods.

Whilst there are numerous ways of distinguishing between types of assessment, the standard approach is to make a distinction between summative and formative elements. It is arguably the latter, with assessment designed expressly to further assist the learning environment, which is at the forefront of the technological revolution. With the diffusion of numerous gadgets (such as the smart phone and the iPad) and the development of apps designed to meet specific staff and student needs, individualised feedback is increasingly available. No longer should large class sizes hinder the provision of formative assessment, with interactive exercises becoming ever more straightforward to implement. Data from the ‘one minute paper’, where students are typically asked ‘What is the most important thing you learned today?’ and ‘What is the least clear issue you still have?’, can be easily collected so that the lecturer can react quickly and keenly.

Whilst we can predict that the likes of SMS texting, ‘tweaching’ (see Gerald 2009 for an introduction to and elaboration of this method) and subsequent related extensions will continue to play an active role in encouraging interactive lectures, the overall environment is one of immense uncertainty but also of exciting opportunities. Fortunately for the authors of this chapter, the present analysis focuses on the more stable environment of summative assessment. Its purpose is to consider how assessment can contribute to one ultimate goal in undergraduate teaching: enabling students to think like economists, rather than adopting the shallower view of economic relations found so readily in poor journalism. Whilst concisely expressed, this is by no means a narrow objective, as the development of an ‘economics skill set’ delivers a range of transferable analytical, quantitative and discursive skills that assist students in many non-economics professions and careers. Developing the ability to think like an economist also leads to a general appreciation of the effectiveness of econometric and discursive assessment tools, as articulated by Santos and Lavin (2004):

‘One way to bring students closer to what economists do is to implement an empirical economics research curriculum that teaches students how to access, chart, and interpret macroeconomic data; search and access peer-reviewed journal articles; and formulate, in writing, positions on economic issues’ (p.148).

It is this summary of aims that motivates the main case study in the chapter, which recounts the creation of ‘twin’ modules designed to develop literature-reviewing and practical econometric skills. In addition, given the league-table-driven environment that currently dictates planning in higher education, there is another goal that should be taken into account when considering the assessment methods employed in these modules. It is pertinently highlighted by Grimes et al. (2004):

‘Students with an external locus-of-control orientation, who believe they have little or no control over their environment, are less likely to assume personal responsibility for their course performance and are more prone to blame powerful others or outside factors, such as luck or fate, to explain observed outcomes.’ (p.143).

The possibility that assessment, whilst it must be constructed to meet key learning outcomes, can also directly increase the likelihood that students place the blame for poor performance on the instructor has to be taken into account and minimised. This is not to say that blame should necessarily be accepted, but rather that careful construction of assessment should prevent the issue of blame from arising. When addressing this particularly delicate problem, it is not simply the type of assessment employed that matters. Issues such as the quantity of assessment become increasingly pertinent (avoiding over-reliance on end-of-period examinations), the quality of the feedback mechanisms adopted becomes more relevant, and ultimately the way in which overall marks are derived comes under scrutiny (observe how the Applied Econometrics module, described below in one of the case studies, makes use of ‘best out of...’ to nurture students’ perception that they have greater control over their final grade).
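A ‘best out of...’ rule can be illustrated with a short sketch. The Python below is a hypothetical illustration only: the function name `best_n_of`, the number of tests, the number counted and the marks themselves are assumptions chosen for the example, not the actual rules of the Applied Econometrics module. It shows why such a rule can give students a sense of control: a single poor result is discarded rather than dragging down the final mark.

```python
def best_n_of(marks, n):
    """Average of the n highest marks (hypothetical 'best n out of m' rule)."""
    if not 0 < n <= len(marks):
        raise ValueError("n must be between 1 and the number of marks")
    best = sorted(marks, reverse=True)[:n]  # keep only the n highest marks
    return sum(best) / n


# Hypothetical marks (out of 100) from five short tests, including one bad day.
marks = [62, 35, 70, 58, 66]
print(best_n_of(marks, 5))  # simple average of all five tests: 58.2
print(best_n_of(marks, 4))  # best four of five, the 35 is dropped: 64.0
```

The design choice is deliberately simple: counting the best n results rewards consistent effort without letting one unlucky test dominate, which is the perception of control the paragraph above describes.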

2.2 Summary of the structural issues

In a widely influential paper, Walstad (2001) summarises several major factors that affect undergraduate assessment in the discipline. These factors are used here to structure the general issues raised by assessment. The following concerns are particularly pivotal: test selection; written versus oral assignments; grade evaluation; opportunities for self-assessment and feedback; testing for higher-order thinking; and the psychology of the economics student.

2.2.1 Test selection

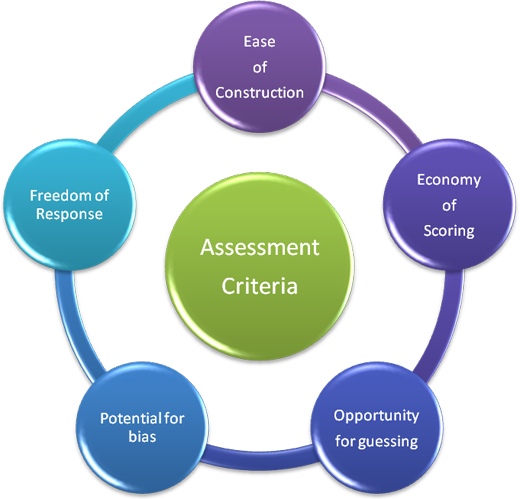

A cursory sweep of standard assessment methods would find reference to the following forms: essays; short answers; numerical problems; multiple-choice; and true-false. Numerous criteria can then be adopted to determine the preferred option. Whilst individual assessors will weight these differently, the issues presented in Figure 1 should be considered carefully when designing the most appropriate assessment methods. This is particularly important because specific criteria may conflict with one another.

Figure 1: Choosing a type of assessment

Here, to introduce these key issues, we compare the advantages and disadvantages of two common assessment strategies: the multiple-choice examination and the essay-based examination. This choice does not reflect a pedagogical preference for multiple-choice examinations over alternatives such as short problem-solving questions, which, as ably discussed in Walstad (2001), can provide an excellent means of assessing a student’s understanding of economic analysis. Instead, it reflects our Level 1 Principles of Economics case study, in which we assess how shifting from a common framework combining multiple-choice and essay assessment towards a greater focus on continuous assessment affects student achievement.

a. Multiple-choice assessment

Arguably, many lecturers will be particularly motivated here by the ease of constructing the assessment. The economy of scoring merely reinforces the convenience of multiple-choice testing, especially given that ‘Principles of Economics’ modules tend to be relatively large. In addition to these two persuasive influences, the possibility of subjective grading is removed, so students are not left questioning the rationale behind their final mark. This simple means of reducing the likelihood of student grievance is a benefit that should not be underestimated; however, it is not a justification for overuse. Unfortunately, the cumulative convenience this form of assessment offers has led to a routine and unquestioning reliance on multiple-choice testing in situations where other forms of evaluation may be equally, if not more, appropriate.

Because judging when multiple-choice assessment is appropriate is far from straightforward, two associated issues are considered in more detail below: the frequency of assessment and the use of negative marking.

i. Frequency

Misuse (overuse and inappropriate use) has exposed the multiple-choice method of assessment to criticism in a variety of forms, the most common being that it is a crude instrument of assessment. This suggests that it should be neither the sole nor the primary means of evaluating student knowledge. Moreover, when it is used, there should be meticulous consideration of how its educational value can be maximised. A traditional, annual multiple-choice exam, whilst giving the instructor flexibility to cover a broader range of the material considered in lectures, provides limited direct feedback. It also encourages the kind of memorisation-driven learning that can hinder a more flexible and creative response to the material. However, an innovation by Kelley (1973) offers a system of frequent multiple-choice testing designed to give detailed feedback in a large lecture context. This has reinvigorated the role of multiple-choice testing, and made it particularly attractive as advances in technology, particularly clickers, facilitate an interactive environment in which student responses can be accessed immediately. Enabling instantaneous feedback, and offering opportunities for students to respond to and learn from their mistakes, is considered by many leading voices to be crucial for students’ perceptions of their own learning experience. In relation to this, Light (1992) reports on the types of courses that students appreciate (or, in his terminology, ‘respect’) and from which they feel they learn most. With the added bonus of unique numbers assigned to individual clickers, this revived assessment method can also be used in conjunction with more practical departmental demands such as attendance monitoring.

ii. Negative marking

Multiple-choice testing is, of course, notoriously vulnerable to guessing. Because a guess always has some chance of being right, students can potentially gain marks despite possessing a weak knowledge of the subject material. ‘Negative marking’, a system which penalises incorrect answers to deter guessing, is one response to this inherent problem. However, such apparent solutions create problems of their own. Consider, for example, this instance of student feedback obtained by the authors in a survey of student opinion:

‘I do not consider negative marking to be fair, with regard to essays that are positively marked… students may not give an answer to a question they are reasonably sure of the answer because they are afraid of getting it wrong and losing marks they have already gained…All the students I have spoken to do not like negative marking as it means the time is a greater constraint as it puts extra pressure on each answer… and mistakes are a greater threat.’

This psychological effect of negative marking, which can impinge upon and inhibit the instinctive thought processes of certain student groups, is more difficult to counteract. For example, groups who are on average more risk averse, and therefore less likely to answer questions they consider difficult, may receive different (and often unfairly lower) marks, which introduces the potential for discrimination.
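The arithmetic behind negative marking can be made explicit with a simple expected-value calculation. Suppose, as an assumption for illustration, that a question has k options, a correct answer earns 1 mark and a wrong answer loses a penalty p. A student who is right with probability q then expects q - (1 - q)p marks from answering, so a blind guess (q = 1/k) is worth nothing on average when p = 1/(k - 1). The sketch below uses hypothetical function names and invented probabilities; it shows that, under such a scheme, answering still pays in expectation whenever q exceeds 1/k, so the ‘reasonably sure’ student who leaves a question blank out of fear gives up marks on average, and that cost falls disproportionately on the more risk averse.

```python
from fractions import Fraction


def expected_mark(q, penalty, reward=1):
    """Expected mark from answering one item that is answered correctly with probability q."""
    q = Fraction(q)
    return q * reward - (1 - q) * Fraction(penalty)


def break_even_penalty(k):
    """Penalty per wrong answer that makes a blind guess among k options worth zero on average."""
    return Fraction(1, k - 1)


penalty = break_even_penalty(4)                  # 1/3 of a mark for four-option questions
print(expected_mark(Fraction(1, 4), penalty))    # blind guess: 0
print(expected_mark(Fraction(1, 2), penalty))    # two options eliminated: 1/3
print(expected_mark(Fraction(7, 10), penalty))   # 'reasonably sure' (70%): 3/5
print(expected_mark(Fraction(1, 4), 0))          # no negative marking, blind guess: 1/4
```

Exact fractions are used so that the break-even result comes out as exactly zero rather than a floating-point approximation.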

b. Essay-based assessment

The opportunities for guessing discussed above can also arise in a different form. More confident students may be tempted to ‘bluff’ knowledge, a strategy that is particularly effective when writing short answers. Unsurprisingly, therefore, to counteract such a strategy, lecturers tend to prefer setting more involved essay questions that separate the genuinely studious from the opportunist. Whilst short answers and multiple-choice questions can be carefully designed to test both comprehension and analytical skills, there is a widespread tendency to view essays as a more versatile and accurate way of measuring higher levels of cognitive learning. In terms of Bloom et al.’s six levels of learning, the multiple-choice exam, whilst focused on knowledge and comprehension, can also be carefully designed to partially test application, analysis and evaluation. In contrast, Walstad (2006) summarises how essay questions can cover all levels of learning:

‘An essay question challenges students to select, organize, and integrate economics material to construct a response—all features of synthesis. An essay question is also better for testing complex achievement related to the application of concepts, analysis of problems, or evaluation of decisions. This demonstration of complex achievement and synthesis is said to be of such importance as a learning objective that it is used to justify the extra time and energy required by the instructor for grading essay tests.’

There are, however, numerous pitfalls that should be considered before blindly accepting the essay as the ultimate testing method. Other than the additional pressures on staff time, these include:

i. Unreliability of grading

Questions will not necessarily enable students to demonstrate the genuine level of their achievement adequately, or allow them to express what they know. Consider, for example, the question ‘How does the monopoly union model compare with the other models of union activity?’ This wording is ambiguous in two respects. First, it is unclear how many models the student is expected to consider. Second, the language does not convey the economic criteria that should be used in any comparison. It is a fallacy that a good student will correctly interpret an imprecise or ambiguous question, as excellent students are as prone as any to fall into the (mis)interpretation trap. In fact, it is fairer to assume that all students, regardless of ability, are inherently disadvantaged by the gap between what the examiner expects (yet so often fails to articulate) and what they select as relevant under pressure.

The response to problems generated by ambiguity, however, should not necessarily be to make the vocabulary of examination questions overly precise. A question such as ‘Does the monopoly union model or the XXX model better describe the UK car industry in the 1980s?’ avoids ambiguity but arguably reduces the task to rote learning, allowing insufficient testing of higher-order skills or independent reading. Instead, pre-examination guidance becomes crucial. Students should appreciate that there is no unique way to rank the relevance of specific economic models. Those who have shown more initiative in their independent reading will then have more to discuss, and therefore greater scope to demonstrate in-depth knowledge and their ability to meet the module’s learning outcomes.

ii. Scoring

Providing detailed feedback on essays is inevitably time-consuming. There is not, and perhaps should not be, any escape from this fact. Indeed, most available solutions to the interminable issue of time are inadequate. For instance, team-based marking, introduced to minimise the time burden on individual markers, has the potential to undermine substantially the reliability of the resulting scores.

iii. Coverage of content

It was noted earlier that a multiple-choice test can encompass a much wider range of taught material. In contrast, essay-based assessment necessarily encourages uneven coverage of content. As essays are generally used in examination periods, this can inadvertently reward a different kind of success by chance, in which fortunate students correctly guess which subset of module content to revise. This is particularly likely when students are under pressure, facing frequent examinations in a short period of time. In these circumstances, time constraints can turn anticipating examination content into a game of chance, with inaccurate prediction of revision topics potentially resulting in dramatic reductions in module marks. Clearly, the structure of degree schemes can affect this, with schemes that cluster assessment across a range of modules causing particular problems.
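The ‘game of chance’ in selective revision can be quantified under a deliberately simple assumption: that the topics appearing on the paper are a uniformly random subset of the module’s topics. Real examiners do not choose topics at random, so this is only a sketch. The hypothetical function below uses the hypergeometric distribution to give the probability that a student who revised only some topics finds enough of them on the paper; the module size, number of examined topics and number of required answers in the example are invented for illustration.

```python
from fractions import Fraction
from math import comb


def prob_enough_revised(n_topics, n_examined, n_revised, n_required):
    """Probability that at least n_required of the examined topics were revised,
    assuming the examined topics are a uniformly random subset of all topics."""
    total = comb(n_topics, n_examined)
    # Count the papers on which exactly k of the examined topics were revised, for k >= n_required.
    favourable = sum(
        comb(n_revised, k) * comb(n_topics - n_revised, n_examined - k)
        for k in range(n_required, n_examined + 1)
    )
    return Fraction(favourable, total)


# Hypothetical module: 10 topics, 4 appear on the paper, 2 questions must be answered.
for revised in (4, 5, 6, 8):
    p = prob_enough_revised(10, 4, revised, 2)
    print(f"revised {revised} topics: P(enough) = {float(p):.2f}")
```

Under these assumptions the probabilities come out at roughly 0.55, 0.74, 0.88 and 1.00, so a student who revised half of the module would be unable to find two revised topics on about one paper in four.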

The basic conclusion from this brief description is that no single assessment method is ideal in every respect and in all circumstances. The available research does not conclude that any one assessment method is superior in the teaching of economics. All have both advantages and disadvantages, and a combination of assessment techniques is therefore recommended to ensure a system that at least approaches fairness. The issue of assessment thus begins to pivot around variation at programme level. Whilst the extensive use of multiple-choice testing in principles of economics courses is understandable, other modules must be flexible and explore the appropriateness of alternative methods. It is only through such variation in assessment practices that students will be able to maximise their performance and fully embrace their learning experience. It is imperative to aspire to such a system because, as has been alluded to previously, poor assessment performance directly influences correspondingly poor evaluations of the instructor, the institution and the discipline.

2.2.2 Written versus oral assessment

For certain students, essays can hamper rather than assist self-expression. In response, lecturers should consider other methods that give students more flexibility in how they express their views and critique economic orthodoxy. For example, it is now standard for economics programmes to offer dissertation modules. Such modules allow students to explore deeper levels of writing, where critique becomes the core objective and the time available permits a sophisticated written response to the material. Arguably of equal significance is how assessment interacts with learning support material. The ‘problem set’, for example, is an alternative assessment method regularly employed in economics teaching. Its main advantage is the clear direction it gives the student, as such assessment is typically tied to a textbook. The dissertation module, however, permits the student to move away from this comfortable environment and strike out alone into research, as the textbook is rightly sidelined and the student is able to embrace a more eclectic and wide-ranging set of economic sources.

Demonstrating a skill set in economics clearly requires writing proficiency. However, a mastery of economics also encompasses proficiency in speaking: ‘speaking ability may be more useful for students because they are more likely to have to speak about economic issues than write about them’ (Walstad, 2001). A similar argument is present in Smith’s (1998) discussion of ‘learning by speaking’ in the teaching of statistics. In response to this need, a plethora of approaches is available. Assuming class size is not an issue, case studies can be used to promote the oral exploration of economic ideas. Group presentations are an alternative, although they are often unpopular with students because of fears of free-riding. They become more straightforward in a business school environment, where economists can utilise their multidisciplinary skill set and be ably supported by business students focused on more specific disciplines such as accountancy, marketing and entrepreneurship. Group presentations also reduce the cost to the lecturer of evaluating students. However, careful thought is advised. Siegfried (1998), for example, in a review of US provision in the 1990s, concluded that ‘the amount and type of student writing assignments and oral presentations in many programs not only fail to prepare students for the demands they will encounter after graduation, but they also limit the ability of students to demonstrate their mastery of economics while still in college’ (p.67).

It could be argued that the potential of oral assessment has been reinvigorated by the introduction of interactive engagement. Examples include more spontaneous activities such as ‘think-pair-share’, in which the instructor poses a discussion point to ignite immediate discussion and critical reasoning. It is interactive engagement, rather than passive listening, that is more effective in generating fundamental conceptual understanding. It also provides an opportunity to develop valuable, quick-thinking life skills for the world beyond academia.

2.2.3 Grade evaluation

Once the type of assessment has been determined, perhaps the most time-consuming task for the economics instructor is determining the marking criteria. This should not be treated as a one-dimensional issue. If the aim is to reward the ability to explain complex ideas in a short period of time, the marking criteria will reflect this and favour the in-class test. In contrast, if the objective is to enable a more flexible approach to a wider range of topics, then reports or presentations may be more apposite.

Perhaps of greater importance is how grading can be used to capture the full range of a student’s economic proficiencies, a particularly pertinent issue for maximising employability. One response, championed by Walstad (2001), is portfolio assessment. This involves a representative collection of work that more comprehensively displays a student’s progress in learning and achievement. Such a compilation necessarily lends itself to greater variation in assessment methodology. For example, reflective learning exercises can be regularly employed, as in the ‘Topics in Contemporary Economics’ case study presented below. The timing of assessment should also be considered, with any improvement between beginning-of-course and end-of-course marks (‘exit velocity’) providing a further means of demonstrating gains in the student’s ability.

2.2.4 Self-assessment and feedback

It is common within the higher education sector to maintain a rigid separation between the formative and summative elements of assessment. Given this chapter’s focus on summative assessment, a clear purpose of assessment is the grading of students and therefore the justification of degree classifications at graduation. However, assessment practice should not be limited to this core aim:

a. Frequency (again)

Frequent assessment gives students a greater opportunity to assess their own progress. As mentioned earlier, this is offered in the seminar systems typically adopted in principles of economics courses. Regular, small multiple-choice tests, rather than one large exam during key examination periods, allow students to monitor their development and adapt accordingly. This is neatly demonstrated by the evolution of undergraduate provision at Swansea University, as summarised in Case Study 1.

Frequent assessment also provides invaluable feedback to instructors, who can respond immediately to the needs it exposes by adapting their lecture schedule to improve the effectiveness of their teaching. There are, of course, more involved ways of providing these opportunities. Walstad (2001), for example, refers to students being asked to keep a journal on current economic events. This can open a continuous dialogue between staff and students that also offers fertile ground for more fluid assessment opportunities. The use of ‘reflective diaries’ provides a means to enhance this dialogue further and offers more innovative ways of assessing student progress.

b. Scoring key

Assessment must be seen as more than just testing, or grading acquired knowledge with a number. The instructor should therefore ensure that feedback mechanisms are integral and carefully constructed. A feedback sheet that combines a scoring key with detailed comment makes it more likely that the student will find the feedback valuable, while also understanding exactly how the mark was derived. It is also imperative that the nature of this feedback sheet is discussed before any assessment deadline, so that students are less likely to fall foul of common pitfalls. Appreciating what is expected is itself part of the learning process, as riddling assessment with snares does not necessarily isolate the gifted. Discussing the feedback sheet in advance also helps instructors avoid grading bias, alerts them to possible ambiguities or misinterpretations of the question, and provides a simple and direct means of justifying differences between grades.

2.2.5 Testing higher-order thinking: retention

A potentially puzzling result from previous research is the doubt it raises over the long-term impact of economics instruction. Stigler (1963), an early critic of teaching in principles courses, posited that if an essay test on current economic problems were given to graduates five years after attending university, there would be no difference in performance between alumni who had taken a ‘conventional’ one-year course in economics and those who had never taken one. Tests of the ‘Stigler hypothesis’ have produced mixed results. For instance, Walstad and Allgood (1999) find that those with a background in economics outperform their counterparts without one, but also that the overall difference in test scores is relatively small.

Given the potential problem posed by knowledge deterioration, testing for higher-order thinking becomes a vital element of any assessment strategy. Progression is crucial: early assessment should measure whether basic concepts have been mastered, and later assessment should focus on whether students are ‘thinking like an economist’. This reiterates the argument of Hamlin and Janssen (1987) that assessment should be constructed to encourage, if not ensure, ‘active learners’. This philosophy rests on the premise that when students are asked to write in conjunction with reading lecture materials, they are more likely to develop a deeper understanding of the concepts and of the connections between theory and economic outcomes. Crowe and Youga (1986), for example, advocate the use of short writing assignments (typically up to 10 minutes, written in the lecture room).

2.2.6 Psychology of the economics student

Behavioural economics provides a means of appreciating the theoretical limitations of the assumption of ‘rational economic man’. Applied to the behaviour of students, it also offers considerable potential for improving the learning experience. One example can be found in Rabin (1998), who explores how concepts from behavioural economics can illuminate weaknesses in student outlook (such as low attendance, and poor test performance resulting from inadequate preparation). Allgood (2001) goes beyond discussion and constructs a utility-maximising model based on achieving target grades: once the grade threshold is achieved, effort falls. This can help explain low attendance, or why course innovations may not necessarily lead to improvements in results. There are, however, further lessons to be learnt for assessment practices.

One arguably underused assessment method is the application of experimental economics, taking advantage of the classroom as a forum for competitive economic gaming. This recent innovation has been encouraged by the development of key resources such as Bergstrom and Miller (1997). The underuse can be partially explained by the belief that these games represent motivational and pedagogical exercises rather than assessment opportunities. There are also practical difficulties. First, these mechanisms are arguably reliant on technology, and without that technology the effort of finalising grades can be prohibitive. Second, there are inherent equity issues in generating marks through competitive games. Third, experimental economists have voiced the need for cash payments to ensure clear motivation behind student behaviour. Whilst the higher fees that will be paid by students may offer further opportunities to introduce such activities, the expense involved will not be attractive to heads of department.

Given that the knowledge instilled by economics teaching is often retained for only a limited time, there are also opportunities, through careful design of assessment, to celebrate and reaffirm the skills that the discipline of economics develops. Walstad (2001), for example, notes how the psychology of stock-market investors can be used to encourage students to perceive economics education as an investment rather than a consumption good.

2.2.7 Discussion

Using US data for 1995 to 2005, Schaur et al. (2012) evaluate the factors determining the choice of assessment methods in US universities. As would be expected, variables such as class size and staff teaching loads are significant determinants of whether essays and longer written forms of assessment are used. Despite these practical constraints, the primary goal of any testing should still be to motivate students to think, and therefore write, like economists. Whilst an economics programme should use a wide range of assessment methods, problems also arise if the balance tips towards the other end of the assessment spectrum. Complete reliance on highly structured tests will not adequately develop the tools required to think and write like an economist either. Such shorter tests fail to challenge students adequately and so reduce module performance to basic recall and ‘brain-training’. There is a clear need for an alternative to both extremes that combines only their positive aspects, and the case study in the next section may offer one such hybrid solution. It describes how data analysis can be combined with a literature review so that developing the required cognitive skills is at the centre of the assessment experience.

2.3 Case Studies

2.4 Concluding remarks and some advice

The above discussion has highlighted the objectives, alternative forms and effectiveness of assessment. From this, and from the case studies presented, several issues emerge that are worth considering when seeking to improve assessment provision. The most prominent concern ‘student control’, the frequency of assessment and its relevance. These are discussed in turn below.

Introduction of an element of student control in assessment

The purpose of assessment is to increase knowledge, achieve learning objectives and generally improve the student experience. However this purpose is expressed, the student is at its centre, so offering students some control over their learning outcomes and experiences is to be welcomed. A ‘best of’ option in assessment, for example, is one means of allowing students to exercise some control over their assessment by omitting a lower-scoring module from the calculation.

Frequency of assessment and increased engagement

Assessment can be used to draw students into a module, testing their understanding and setting independent work that prompts them to consider what is covered and why. Frequent assessment has a clear role to play in increasing engagement, as illustrated at a simple level by the level 1 mini-tutorial exercises in one case study, and at level 3 by the repeated assessment points in the final-year dissertation case study. More frequent assessment helps students appreciate the relevance of the material more fully and become more involved in their studies.

Making it relevant

Relevance operates at two levels. The first is the ‘content level’. If assessment has a role in ensuring that learning objectives are achieved, it must have clear relevance. In short, it should be easy to fill in the blanks in the following: ‘This assessment considers the use/properties/application of ______, as it is important to understand this because ______.’ Assessment that is dry, overly theoretical or archaic will have a negative impact on student engagement and performance. Reinforcing the relevance of assessment by highlighting real-world applications or policy relevance demonstrates the worth of the material and so motivates students. This is reflected in the case studies above: Applied Econometrics, for example, links econometric tools and techniques to the analysis of, inter alia, housing markets, international inflation dynamics and labour market outcomes.

At the second level, relevance concerns the manner in which assessment is undertaken. Again, the case studies above illustrate this. If modules are topical, their assessment must be too. This is most apparent in quantitative disciplines, where assessment should reflect advances in technology and data availability. In short, discussing methods is not an appropriate sole means of assessment when everything required to apply them is at hand.

3. Where next?

Resources available on the Economics Network website include:

(i) Assessment and monitoring case studies:

http://www.economicsnetwork.ac.uk/showcase/assessment

(ii) Smith, P. ‘Undergraduate Dissertations in Economics’

http://www.economicsnetwork.ac.uk/handbook/dissertations

(iii) Miller, N. ‘Alternative forms of formative and summative assessment’

http://www.economicsnetwork.ac.uk/handbook/assessment/.

For in-depth pedagogical analysis:

Bloom, B., Engelhart, M., Furst, E., Hill, W. and Krathwohl, D. (1956) Taxonomy of Educational Objectives: The Classification of Educational Goals; Handbook I: Cognitive Domain, New York: Longmans, Green.

Weinstein, C. and Mayer, R. (1986) ‘The teaching of learning strategies’, in Wittrock, M. (ed.) Handbook of Research on Teaching, New York: Macmillan.

For US analysis into different assessment forms:

Myers, S.C., Nelson, M.A. and Stratton, R.W. (2011) ‘Assessment of the Undergraduate Economics Major: A National Survey’, Journal of Economic Education, Vol. 42(2), pp. 195–9.

https://doi.org/10.1080/00220485.2011.555722

Becker, W. (2000) ‘Teaching economics in the 21st Century’, Journal of Economic Perspectives, Vol. 14(1), pp. 109–19.

https://doi.org/10.1257/jep.14.1.109

Walstad, W. (2001) ‘Improving assessment in university economics’, Journal of Economic Education, Vol. 32(3), pp. 281–94.

https://doi.org/10.1080/00220480109596109

4. Top Tips

When considering different methods of assessment, the instructor should also consider the frequency of assessment.

- As frequency increases, the work burden of the instructor may increase, but the gains in terms of module performance can be substantial.

There is no ‘ideal’ assessment method: degree programmes should be reviewed in terms of the breadth of their portfolio of assessment methods, with variety in design providing opportunities to enhance student performance.

Oral and written forms of assessment allow students to demonstrate life skills relevant to the world beyond academia.

Over-reliance on any one assessment form, such as end-of-term examinations, can encourage students to feel that they are not in full control of their degree outcome, potentially harming their opinion of the quality of the learning experience.

Retention of knowledge, demonstrated through higher-order thinking, should be a critical consideration in the choice of assessment.

5. References

Allgood, S. (2001) ‘Grade targets and teaching innovations’, Economics of Education Review, Vol. 20(5), pp. 485–93. https://doi.org/10.1016/S0272-7757(00)00019-4

Bergstrom, T.C. and Miller, J.H. (1997) Experiments with Economic Principles, New York: McGraw-Hill.

Crowe, D. and Youga, J. (1986) ‘Using writing as a tool for learning economics’, Journal of Economic Education, Vol. 17, pp. 218–22. http://www.jstor.org/stable/1181971

Dynan, L. and Cate, T. (2009) ‘The impact of writing assignments on student learning: Should written assignments be structured or unstructured?’, International Review of Economics Education, Vol. 8(1), pp. 62–86. http://economicsnetwork.ac.uk/iree/v8n1/dynan.pdf

Ethridge, D.E. (2004) Research Methodology in Applied Economics, Oxford: Wiley-Blackwell.

Gerald, S. (2009) ‘Tweaching: Teaching and learning in 140 characters or less’, SIDLIT Conference Proceedings. Paper 14. http://scholarspace.jccc.edu/sidlit/14.

Grimes, P., Millea, M. and Woodruff, T. (2004) ‘Grades: Who's to blame? Student evaluation of teaching and locus of control’, Journal of Economic Education, Vol. 35(2), pp. 129–47. https://doi.org/10.3200/JECE.35.2.129-147

Hamlin, J. and Janssen, S. (1987) ‘Active learning in large introductory sociology courses’, Teaching Sociology, Vol. 15, pp. 45–57. http://www.jstor.org/stable/1317817

Hendry, D. (2004) ‘The E. T. Interview: Professor David Hendry’, Econometric Theory, Vol. 20, pp. 743–804. https://doi.org/10.1017/S0266466604204078

Hughes, P. (2007) ‘Learning about learning or learning to learn’. In A. Campbell and L. Norton (eds), Learning, Teaching and Assessing in Higher Education: Developing Reflective Practice (pp. 9–20), Exeter, England: Learning Matters.

Kelley, A. (1973) ‘Individualizing education through the use of technology in higher education’, Journal of Economic Education, Vol. 4(2), pp. 77–89. http://www.jstor.org/stable/1182257

Kolb, D. (1984) Experiential Learning: Experience as the Source of Learning and Development, New Jersey: Prentice Hall.

Kolb, D. and Fry, R. (1975) ‘Toward an applied theory of experiential learning’, in Cooper, C. (ed.) Theories of Group Process, London: John Wiley.

Light, R. (1992) The Harvard Assessment Seminars, Cambridge, MA: Harvard University Graduate School of Education and Kennedy School of Government, Second Report.

Rabin, M. (1998) ‘Psychology and economics’, Journal of Economic Literature, Vol. 36(1), pp. 11–46. http://www.jstor.org/stable/2564950

Rich, M. (2010) ‘Embedding Reflective Practice in Undergraduate Business and Management Dissertations’, International Journal of Management Education, Vol. 9(1), pp. 57–66. https://doi.org/10.3794/ijme.91.216

Santos, J. and Lavin, A. (2004) ‘Do as I Do, Not as I Say: Assessing outcomes when students think like economists’, Journal of Economic Education, Vol. 35(2), pp. 148–61. https://doi.org/10.3200/JECE.35.2.148-161

Schaur, G., Watts, M. and Becker, W. (2012) ‘School, department, and instructor determinants of assessment methods in undergraduate economics courses’, Eastern Economic Journal. https://doi.org/10.1057/eej.2011.20

Schön, D. (1986) The Reflective Practitioner, New York: Basic Books.

Siegfried, J. (1998) ‘The goals and objectives for the undergraduate major’, in Walstad, W. and Saunders, P. (eds), Teaching Undergraduate Economics: A Handbook for Instructors (pp. 59–72), New York: McGraw-Hill.

Smith, G. (1998) ‘Learning statistics by doing statistics’, Journal of Statistics Education, 6, paper 3. http://www.amstat.org/publications/jse/v6n3/smith.html.

Stigler, G. (1963) ‘Elementary economic education’, American Economic Review, Vol. 53(2), pp. 653–59. http://www.jstor.org/stable/1823905

Todd, M., Bannister, P. and Clegg, S. (2004) ‘Independent inquiry and the undergraduate dissertation: Perceptions and experiences of final-year social science students’, Assessment & Evaluation in Higher Education, Vol. 29, pp. 335–55. https://doi.org/10.1080/0260293042000188285

Walstad, W. (2001) ‘Improving assessment in university economics’, Journal of Economic Education, Vol. 32(3), pp. 281–94. https://doi.org/10.1080/00220480109596109

Walstad, W. (2006) ‘Testing for depth of understanding in economics using essay questions’, Journal of Economic Education, Vol. 37(1), pp. 38–47. https://doi.org/10.3200/JECE.37.1.38-47

Walstad, W. and Allgood, S. (1999) ‘What do college seniors know about Economics?’, American Economic Review, Vol. 89(2), pp. 350–54. https://doi.org/10.1257/aer.89.2.350

Weinstein, C. and Mayer, R. (1986) ‘The teaching of learning strategies’, in Wittrock, M. (ed.) Handbook of Research on Teaching, New York: Macmillan.

Wiberg, M. (2009) ‘Teaching statistics in integration with psychology’, Journal of Statistics Education, Vol. 17, paper 1. www.amstat.org/publications/jse/v17n1/wiberg.html.
