Background

Economic Methods is a first-year quantitative module taken by approximately 300 single- and joint-honours students at Durham University. It covers essential data analysis topics, ranging from data summary and visualisation through hypothesis testing and simulation to bivariate regression. The module is assessed summatively by a data analysis project and a year-end exam.

Before the COVID pandemic, I tended to use in-class quizzes and traditional problem sets to give students immediate feedback. Whilst these provided some preparation for the exam, they did little to prepare students for the project. Given the importance of data analysis skills for this module and beyond (e.g., in the labour market[1]), I also supplemented the lectures with computer practical sessions in which students gained first-hand experience of data analysis.

Each computer practical session began with a demonstration, after which students were encouraged to work on a task that paved the way for the data analysis project. All of these feedback mechanisms, including the computer practical sessions, relied heavily on face-to-face interaction to provide prompt feedback, so that a misunderstanding would not create a “snowball effect”: that is, a misunderstanding of, or gap in, an early topic that goes on to affect students’ engagement and learning in later topics. It is therefore important to tackle such issues as early as possible.

With the onset of the pandemic, the lectures and the computer practical sessions had to be offered asynchronously. In the 2019/20 academic year, I adopted online quizzes embedded in the lecture recordings. However, student feedback indicated that these did not provide enough preparation for the exam and that “more practice” would have helped. Students made similar comments about the computer practical sessions. Whilst exam performance was not meaningfully different from that of past cohorts, this cohort’s marks for the data analysis project showed a significant shift towards the lower tail of the distribution. I therefore decided to change the feedback mechanism so that students would get prompt and meaningful feedback.

New Design

In 2020/21, I redesigned the asynchronous material so that it could support hybrid teaching, in which each lecture is delivered both face-to-face and online. The new design introduced the following elements:

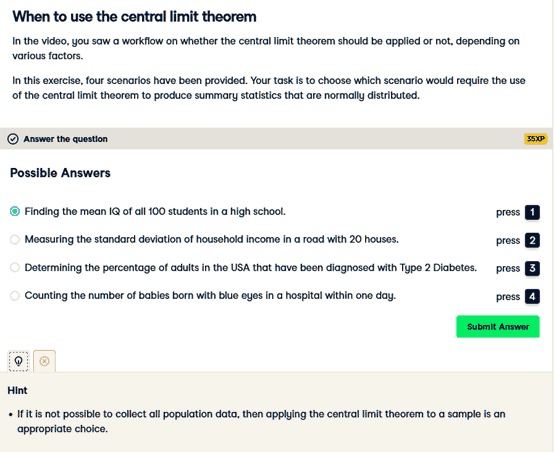

- Instead of using quizzes embedded in the virtual learning environment (VLE) or drawn from a test bank, I used questions based on real data sets, designed with DataCamp resources. DataCamp provides data science training tools and courses, mainly for businesses. Unlike test bank questions, DataCamp allows instructors to use real-life data sets and to customise questions around them. Since the theoretical background was covered in the lectures, I chose a variety of data sets and question types so that students could see the theory applied in different ways. After each topic, students could attempt around 10-12 quizzes, each containing 4-5 questions. On completing each quiz, students received feedback that included an explanation of the answer. Rather than giving simple right-or-wrong feedback, I used the submission correctness test (SCT) feature to provide individualised feedback: it generates automatic feedback at each step of a question and can also offer hints when students need them. Figure 1 below shows an example of a multiple-choice question.

Most of the quiz content was drawn from existing DataCamp questions; for some topics, however, I created my own questions, which DataCamp allows.

Figure 1: A multiple-response question in DataCamp

- The computer practical sessions contained more practice to better prepare students for the assessed project. To that end, I incorporated more openly available data sources into these sessions; one particularly useful source was Kaggle Datasets. Each session contained either one large project or two mini-projects based on a dataset. Each project could be completed using the Analysis ToolPak in Excel, which was recommended for its ease of use. However, each project could also be carried out in a programming language such as R or Python, and parallel instructions were provided for students who preferred an alternative such as R.

- Some theoretical topics, such as the Law of Large Numbers and the Central Limit Theorem, were explained with the help of Monte Carlo simulations. In the final computer practical session, students had the chance to simulate asset returns and to forecast them.
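
The module's own simulation materials are not reproduced here. As a rough illustration of the kind of Monte Carlo exercise described above, the following is a minimal sketch using only the Python standard library (3.8 or later). It assumes coin flips for the Law of Large Numbers, sample means from a skewed exponential population for the Central Limit Theorem, and, for the asset-return part, independent normally distributed per-period returns with made-up parameters `mu` and `sigma`. All function names and parameter values are hypothetical.

```python
import random
import statistics


def lln_running_means(n_draws, p=0.5, seed=1):
    # Law of Large Numbers: running share of "heads" in Bernoulli(p) coin flips.
    rng = random.Random(seed)
    heads = 0
    means = []
    for i in range(1, n_draws + 1):
        if rng.random() < p:
            heads += 1
        means.append(heads / i)
    return means


def clt_sample_means(sample_size, n_samples, seed=2):
    # Central Limit Theorem: means of repeated samples from a skewed
    # exponential population with mean 1 (and standard deviation 1).
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(sample_size))
        for _ in range(n_samples)
    ]


def simulate_cumulative_returns(n_periods, mu, sigma, n_paths, seed=3):
    # Assumption: per-period returns are i.i.d. normal(mu, sigma).
    # Each path compounds the returns; the result is the cumulative return per path.
    rng = random.Random(seed)
    finals = []
    for _ in range(n_paths):
        value = 1.0
        for _ in range(n_periods):
            value *= 1 + rng.gauss(mu, sigma)
        finals.append(value - 1)
    return finals


running = lln_running_means(10_000)
print(f"LLN: mean after 10 flips {running[9]:.3f}, after 10,000 flips {running[-1]:.3f}")

means = clt_sample_means(sample_size=50, n_samples=2_000)
# Theory: approximately normal with mean 1 and standard deviation 1/sqrt(50), about 0.141.
print(f"CLT: mean of sample means {statistics.fmean(means):.3f}, sd {statistics.stdev(means):.3f}")

finals = simulate_cumulative_returns(n_periods=12, mu=0.005, sigma=0.04, n_paths=5_000)
cuts = statistics.quantiles(finals, n=20)  # 19 cut points: 5%, 10%, ..., 95%
print(f"12-period return forecast: median {statistics.median(finals):.3f}, "
      f"90% interval [{cuts[0]:.3f}, {cuts[-1]:.3f}]")
```

A histogram of `means` would show the bell shape the Central Limit Theorem predicts even though the underlying exponential population is strongly skewed. Treating the simulated paths as a forecast distribution, rather than a single point forecast, is one design choice among several; under the normal-returns assumption, the quantiles give a simple forecast interval.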

Discussion

After the changes were implemented, the distribution of project marks improved significantly, although it did not fully return to the pre-pandemic level. Responses to the student module evaluation questionnaire were extremely positive about the changes; in particular, students commented favourably on the immediate feedback and the number of quiz questions. However, some students experienced problems with the tools described above, in particular DataCamp.

Two issues are worth mentioning: (i) compatibility problems and (ii) user problems. To the best of my knowledge, the VLE platforms commonly used in UK higher education are not compatible with these external resources. I solved this issue by creating a DataCamp class for the module and admitting the students into it. User problems took various forms, so it is advisable to hold an introductory session on how to access and use the resources. Later in the module, the number of reported issues decreased significantly. Another concern might be the cost of these resources. Fortunately, both platforms (DataCamp and Kaggle) offer free access with a university credential such as a university email address.

Notes

[1] See Jenkins and Lane (2019) for details.

References

Cetinkaya-Rundel, M. and Ellison, V. (2020) "A Fresh Look at Introductory Data Science". Journal of Statistics Education. https://doi.org/10.1080/10691898.2020.1804497.

Jenkins, C. and Lane, S. (2019) "Employability Skills in UK Economics Degrees". The Economics Network. https://doi.org/10.53593/n3245a.